Sony has announced two new intelligent vision sensors, the first camera sensors to boast AI processing functionality.

As the world’s first image sensors with on-board AI processing, they could herald the rise of AI-equipped cameras that offer a range of new functionality.

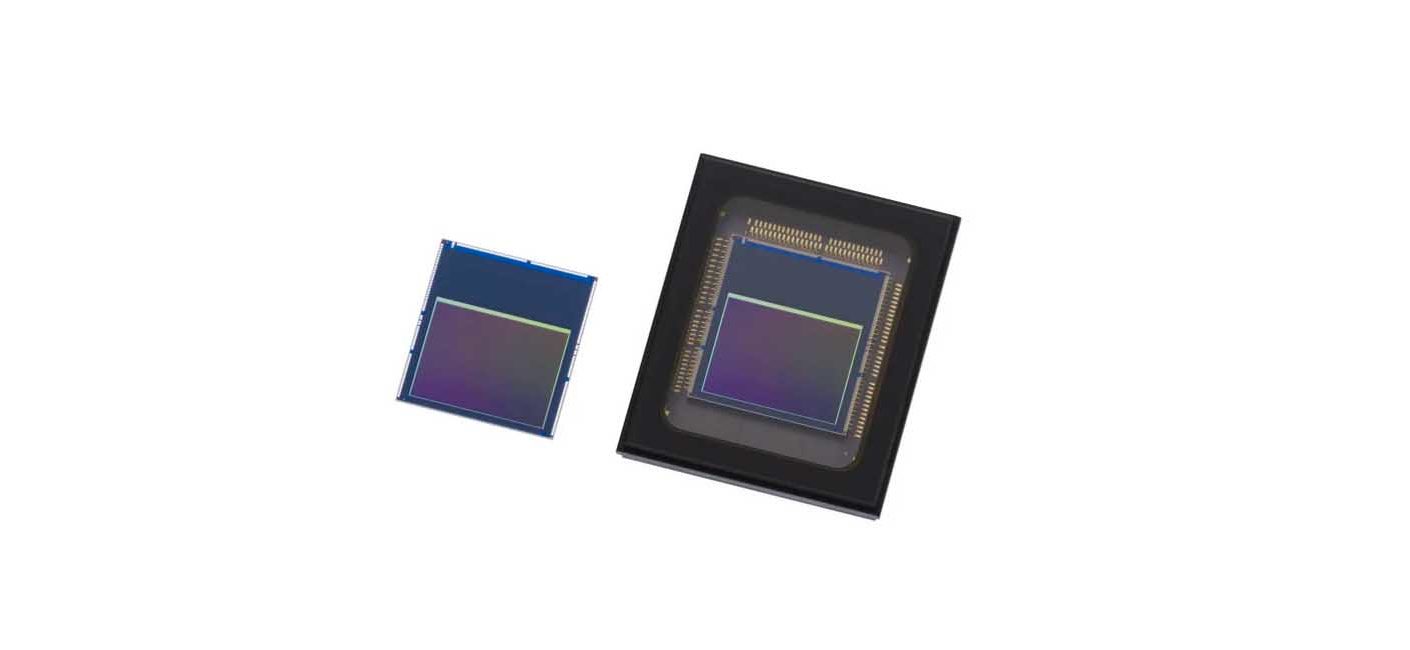

The IMX500, a 1/2.3-type intelligent vision sensor with 12.3 effective megapixels, is shipping now. The IMX501, also a 1/2.3-type sensor with 12.3 effective megapixels, follows in June.

Each sensor is back-illuminated and features a stacked design consisting of a pixel chip and a logic chip.

The logic chip is equipped with Sony’s original DSP (Digital Signal Processor) dedicated to AI signal processing, and memory for the AI model. This configuration eliminates the need for high-performance processors or external memory, making it ideal for AI systems. According to Sony:

Signals acquired by the pixel chip are run through an ISP (Image Signal Processor) and AI processing is done in the process stage on the logic chip, and the extracted information is output as metadata, reducing the amount of data handled. Ensuring that image information is not output helps to reduce security risks and address privacy concerns. In addition to the image recorded by the conventional image sensor, users can select the data output format according to their needs and uses, including ISP format output images (YUV/RGB) and ROI (Region of Interest) specific area extract images.

When a video is recorded using a conventional image sensor, it is necessary to send data for each individual output image frame for AI processing, resulting in increased data transmission and making it difficult to deliver real-time performance. The new sensor products from Sony perform ISP processing and high-speed AI processing (3.1 milliseconds processing for MobileNet V1) on the logic chip, completing the entire process in a single video frame. This design makes it possible to deliver high-precision, real-time tracking of objects while recording video.
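To put the 3.1 millisecond figure in context, the short Python sketch below compares it with the length of a single video frame. The frame rates are assumptions chosen for illustration, not Sony specifications, and the quoted figure covers only the AI step, so time spent in the ISP is not counted here.

```python
# Rough frame-budget check. Only the 3.1 ms figure comes from the article;
# the frame rates below are illustrative assumptions.
AI_LATENCY_MS = 3.1  # quoted MobileNet V1 processing time


def frame_period_ms(fps: float) -> float:
    """Duration of one video frame in milliseconds."""
    return 1000.0 / fps


def fits_in_frame(latency_ms: float, fps: float) -> bool:
    """True if the processing latency is no longer than one frame period."""
    return latency_ms <= frame_period_ms(fps)


for fps in (30, 60, 120):  # assumed frame rates
    period = frame_period_ms(fps)
    share = AI_LATENCY_MS / period
    print(f"{fps} fps: frame = {period:.2f} ms, AI step uses {share:.0%} of it")
```

At 30 frames per second a frame lasts about 33 ms, so the quoted AI step would take up roughly a tenth of it, which is consistent with the claim that processing completes within a single frame.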

Users can write the AI models of their choice to the embedded memory and can rewrite and update it according to its requirements or the conditions of the location where the system is being used. For example, when multiple cameras employing this product are installed in a retail location, a single type of camera can be used with versatility across different locations, circumstances, times, or purposes. When installed at the entrance to the facility it can be used to count the number of visitors entering the facility; when installed on the shelf of a store it can be used to detect stock shortages; when on the ceiling it can be used for heat mapping store visitors (detecting locations where many people gather), and the like. Furthermore, the AI model in a given camera can be rewritten from one used to detect heat maps to one for identifying consumer behavior, and so on.
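The retail scenario can be pictured as one camera type whose model is chosen by where it is mounted. The Python sketch below is purely illustrative: the `Camera` class, the `write_model` method, and the model names are hypothetical stand-ins, not Sony's API, and actually writing a model to the sensor's embedded memory would go through Sony's own tooling.

```python
from dataclasses import dataclass

# Hypothetical model names, keyed by where the camera is mounted.
MODEL_FOR_PLACEMENT = {
    "entrance": "visitor_counting",
    "shelf": "stock_shortage_detection",
    "ceiling": "visitor_heat_mapping",
}


@dataclass
class Camera:
    camera_id: str
    placement: str
    model: str = ""

    def write_model(self, model: str) -> None:
        """Stand-in for rewriting the model held in the sensor's memory."""
        self.model = model


def provision(camera: Camera) -> Camera:
    """Assign the model that matches the camera's placement."""
    camera.write_model(MODEL_FOR_PLACEMENT[camera.placement])
    return camera


# The same camera type gets a different model depending on placement...
cam = provision(Camera("cam-01", "ceiling"))
# ...and can later be switched to another task without changing hardware.
cam.write_model("consumer_behavior_analysis")
```

Keeping the placement-to-model mapping in one table mirrors the point made above: the hardware stays the same, and only the model written to it changes.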